Purpose

- Algorithmic integrity means designing, implementing, and maintaining algorithms that are transparent, fair, accountable, and aligned with your company's values.

- Algorithmic decisions you make today will shape your company's future and impact thousands, millions or billions of users.

- Algorithmic integrity isn't just about avoiding negative headlines; it's about building systems that create genuine value while respecting human dignity and fairness. It means ensuring your automated decision-making systems earn the trust of users, investors, and all other stakeholders, rather than serving only short-term returns.

- Building algorithmic integrity into your systems from day one is far easier and cheaper than retrofitting it later. It also helps you avoid the kind of costly regulatory issues and PR disasters that kill early-stage companies.

Method

- Mark bright lines

Create a simple algorithm ethics charter: a one-page document that outlines your commitment to fairness, transparency, and accountability. This becomes your North Star for algorithmic decisions. To do this, define your company's core values and how they translate to algorithmic decision-making, ideally before writing a single line of code. What outcomes do you want your algorithms to optimize for? What outcomes do you want to explicitly avoid? Document these principles and make them accessible to every team member.

- Hire well

Hire a technical team with a range of skills, including: PyTorch/TensorFlow and other modern machine learning libraries; experience using and deploying frontier models (both externally hosted and open-source); scikit-learn for core ML algorithms and evaluation metrics; pandas/NumPy for data manipulation and analysis; Matplotlib/seaborn for basic data visualization; SHAP for model explainability and feature importance; Fairlearn for fairness assessment and mitigation; MLflow for experiment tracking and model management; Great Expectations for data validation and quality; AIF360 for advanced fairness metrics and algorithms; Kubeflow for end-to-end ML pipeline orchestration; and Apache Kafka for real-time data streaming to support monitoring.

- Document efforts

Document your algorithmic decision-making processes. Keep records of what data you use, how you process it, what assumptions you make, and how you evaluate performance. This documentation becomes invaluable for debugging bias, improving performance, and demonstrating accountability.

- Lay clear groundwork

Good algorithms require good data, and good data requires good governance. Create clear policies for data collection, storage, and usage. Implement data quality checks and regular audits. Ensure you have proper consent for data usage and clear retention policies.

- Consider user groups

Understand that algorithms affect user groups differently. Map out your user profiles/personas across dimensions like age, gender, race, socioeconomic status, geography, and ability, and ask: How might this algorithm affect each group differently? What unintended consequences could emerge?

- Mind the data

Pay special attention to training data quality. Biased training data creates biased algorithms. Regularly audit datasets for representation gaps, historical biases, and data quality issues. When possible, collect additional data to fill gaps rather than making assumptions.

- Feedback loop

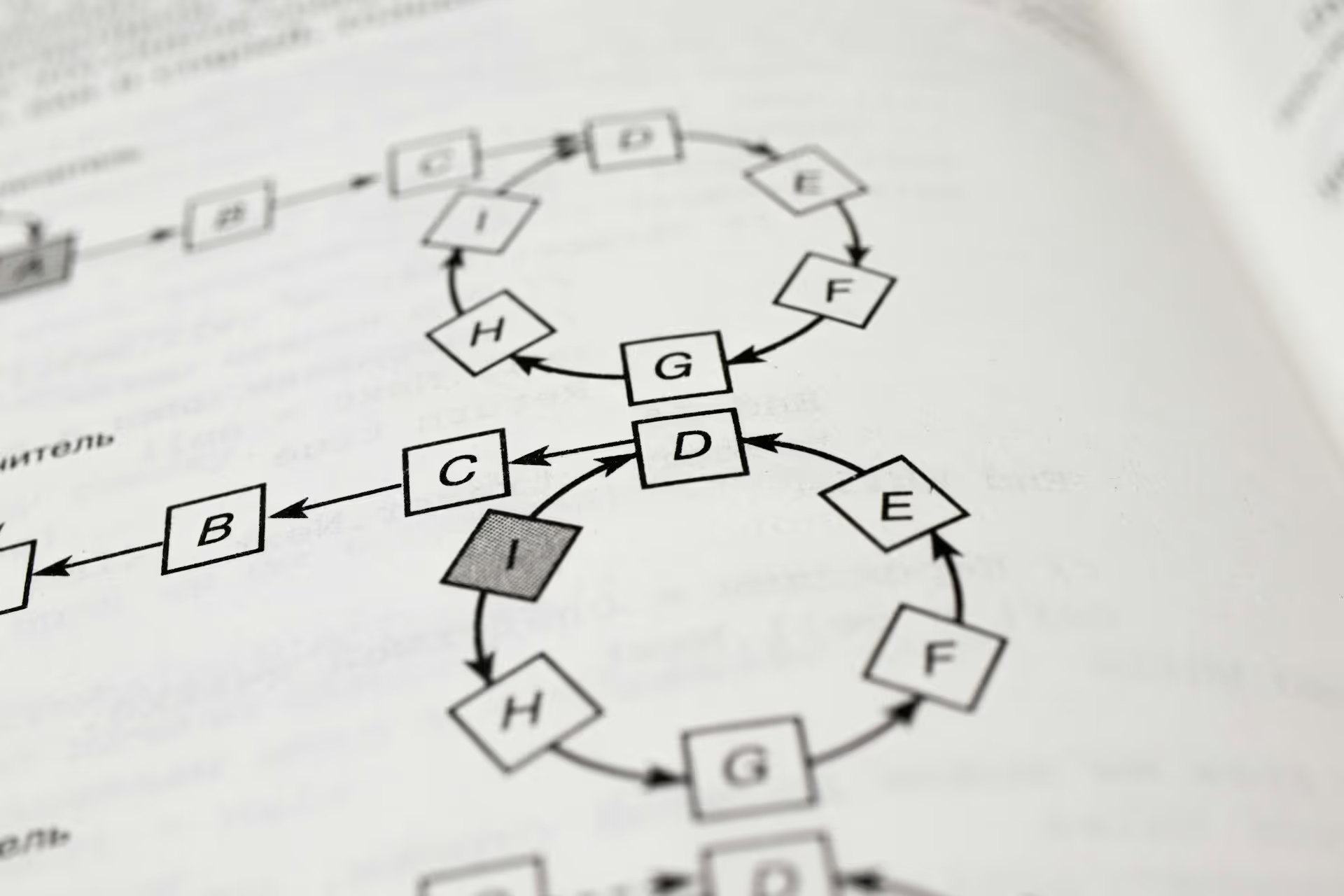

Create mechanisms for users to report problems, appeal decisions, or provide feedback on algorithmic outcomes. This isn't just good customer service; it's essential intelligence for improving your algorithms and catching bias early.

- Review

Establish regular review cycles for your algorithms. Monthly or quarterly reviews should examine performance metrics, bias indicators, user feedback, and alignment with company values. Make algorithm review a standard part of your product development process.

- Add metrics

Add metrics for algorithmic transparency and user satisfaction to your KPIs. Track them consistently and set improvement targets. Consider fairness metrics such as demographic parity (equal rates of positive outcomes across groups), equalized odds (equal true-positive and false-positive rates across groups), and individual fairness (similar individuals receive similar treatment). Choose metrics that fit your specific use case and values.
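As a rough illustration, the sketch below computes the first two metrics by hand for binary decisions. It assumes 0/1 labels and predictions, a single sensitive attribute, and that every group contains both actual positives and actual negatives. In practice you would more likely use a library: Fairlearn, for example, provides `demographic_parity_difference` and `equalized_odds_difference` in `fairlearn.metrics`. Individual fairness is left out because it depends on a task-specific notion of which individuals count as "similar".

```python
from typing import Hashable, Sequence


def _rate(values: Sequence[int]) -> float:
    """Share of 1s in a list of 0/1 values."""
    if not values:
        raise ValueError("cannot compute a rate over an empty subgroup")
    return sum(values) / len(values)


def demographic_parity_difference(y_pred: Sequence[int], groups: Sequence[Hashable]) -> float:
    """Largest gap in selection rate (share of positive decisions) across groups."""
    rates = [
        _rate([p for p, g in zip(y_pred, groups) if g == grp])
        for grp in set(groups)
    ]
    return max(rates) - min(rates)


def equalized_odds_difference(
    y_true: Sequence[int], y_pred: Sequence[int], groups: Sequence[Hashable]
) -> float:
    """Larger of the across-group gaps in true-positive rate and false-positive rate."""
    tprs, fprs = [], []
    for grp in set(groups):
        members = [(t, p) for t, p, g in zip(y_true, y_pred, groups) if g == grp]
        tprs.append(_rate([p for t, p in members if t == 1]))  # hits among actual positives
        fprs.append(_rate([p for t, p in members if t == 0]))  # false alarms among actual negatives
    return max(max(tprs) - min(tprs), max(fprs) - min(fprs))


if __name__ == "__main__":
    # Toy data: four people in group "a", four in group "b".
    y_true = [1, 1, 0, 0, 1, 1, 0, 0]
    y_pred = [1, 1, 1, 0, 1, 1, 0, 0]
    groups = ["a"] * 4 + ["b"] * 4
    print("demographic parity difference:", demographic_parity_difference(y_pred, groups))
    print("equalized odds difference:", equalized_odds_difference(y_true, y_pred, groups))
```

In this toy data both groups have the same true-positive rate, but group "a" receives a false positive that group "b" does not, so the equalized-odds gap (0.5) is twice the demographic-parity gap (0.25). This is one reason to track more than one fairness metric.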

Trap Doors

- Retrofitting…

Retrofitting algorithms at the core of a product is expensive and can damage your market standing. Avoid this form of technical debt by starting with simple, interpretable algorithms and adding complexity gradually.

- Overlooking…

Don’t overlook the ancillary toolchains and skills your engineers will need. Build a team with proficiency in constrained optimization libraries (cvxpy, scipy.optimize, or similar), experience with multi-objective and hyperparameter optimization frameworks (pymoo, optuna, hyperopt), knowledge of fairness-aware ML libraries (Fairlearn, AIF360, Themis), and an understanding of regularization techniques and how to apply them toward fairness goals.

- Overindexing…

Don’t focus solely on optimizing for engagement, conversion, or revenue; doing so can lead to algorithms that exploit human psychology or amplify harmful content. What drives short-term metrics often conflicts with long-term user wellbeing and business sustainability.

- Blindspots…

Avoid the “homogeneous team blind spot”: teams with similar backgrounds tend to share the same blind spots, which can produce brittle products that break when they expand to serve broader user populations. Seek out different points of view from employees, advisers, user research, or third-party tools.

- Uninterpretable…

Don’t create a “black box” problem. As algorithms become more sophisticated, they often become less interpretable, which can make it impossible to understand why decisions are made, debug problems, or ensure fairness. Instead, prioritize interpretable models, and when you do use complex models, invest in explainability tools and techniques. Make your ability to understand and explain your algorithm's key decisions a KPI.

- (Not) one and done…

Change is inevitable, and algorithms aren't set-and-forget systems. They interact with dynamic environments, changing user behavior, and evolving datasets. What works fairly today may become biased tomorrow as conditions change. Implement continuous monitoring and regular retraining schedules. Set up alerts for when algorithm performance or fairness metrics drift beyond acceptable ranges.

- Oversimplify…

Don’t overlook algorithmic integrity as a source of product differentiation and competitive advantage. Ethical algorithms often perform better, create more user trust, and open new market opportunities. Treating algorithmic integrity purely as a compliance issue, something to check off a list, misses its strategic value. Compliance is the floor, not the ceiling.

Mind the aperture.

What drives short-term metrics often conflicts with long-term user wellbeing and business endurance.

Cases

Pymetrics (acquired by Harver) disrupted the $200B recruiting industry by making bias elimination their core product differentiator. Instead of building another resume-screening tool, founders (including a neuroscientist) designed gamified behavioral assessments that evaluate candidates' cognitive and emotional traits without seeing names, schools, or work history (TechCrunch, 2017; Pymetrics.ai, 2023).

Their key product decision: building custom algorithms for each client based on their top performers, then rigorously testing each algorithm for bias before deployment. They open-sourced their bias-auditing tool (Audit-AI) and actively sought third-party audits—unusual transparency that built trust with enterprise clients (Pymetrics.ai; Wharton Human-AI Research, 2024).

The results were clear: 50+ enterprise clients including Accenture and Unilever, with some companies doubling their interview-to-hire conversion rates while increasing diversity. Unilever put 250,000 employees through Pymetrics' assessments (TechCrunch, 2017). The platform helped companies hire candidates they'd never previously considered—including "one who'd never been on a plane before"—while expanding talent pools beyond expensive college graduates (Harvard Digital Innovation and Transformation, 2019).

Making fairness your primary value proposition—not just a compliance checkbox—can open doors to risk-averse enterprise clients and become your strongest competitive moat.

Sixfold attacked the opaque insurance underwriting market with a bold promise: "No black box, everything is transparent." While competitors emphasized speed or accuracy, Sixfold made explainability their core differentiator, providing "full sourcing and lineage of all underwriting decisions" (Sixfold.ai).

Their product architecture reflects this commitment: every risk insight shows complete transparency that compliance teams "will love," and the platform continuously explores new ways to evaluate bias. This transparency-first approach helped them land Zurich's North America Middle Market team as an early adopter for their Narrative feature, which standardizes how risk is communicated across the organization (Sixfold.ai).

By focusing on explainability in a highly regulated industry where a single biased decision could trigger regulatory action, Sixfold positioned itself as the safe choice for enterprise insurers, MGAs, and reinsurers who need to justify every underwriting decision (Sixfold.ai). The company specifically built for isolated environments to protect each client's unique risk appetite—showing how privacy and transparency can coexist.

In regulated industries, radical transparency isn't just about ethics—it's about de-risking adoption for enterprise buyers who fear regulatory penalties more than they desire efficiency gains.

Who to Enlist

Even a junior data scientist can do basic bias detection using existing libraries, conduct simple explainability analysis with SHAP/LIME, do data quality checks using Great Expectations and perform basic statistical testing for fairness metrics.
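For basic statistical testing of a fairness metric, a common first question is whether a gap in selection rates between two groups is larger than chance would explain. The sketch below assumes you only have counts of selected and total candidates per group. It applies a pooled two-proportion z-test and also reports the adverse impact ratio behind the "four-fifths" rule of thumb used in US employment-selection guidance. Both are screening heuristics, not proof of bias or of its absence, and the z-test assumes independent decisions and reasonably large groups. The counts in the example are made up.

```python
import math


def two_proportion_z_test(selected_a: int, total_a: int, selected_b: int, total_b: int):
    """Two-sided z-test for a difference in selection rates between groups A and B.

    Returns (z, p_value), using the pooled-proportion standard error.
    """
    rate_a = selected_a / total_a
    rate_b = selected_b / total_b
    pooled = (selected_a + selected_b) / (total_a + total_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    if se == 0:
        raise ValueError("selection rate is 0% or 100% in both groups; test is undefined")
    z = (rate_a - rate_b) / se
    # Two-sided p-value for a standard normal Z: P(|Z| >= |z|) = erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


def adverse_impact_ratio(selected_a: int, total_a: int, selected_b: int, total_b: int) -> float:
    """Lower selection rate divided by the higher one (1.0 means parity)."""
    rate_a, rate_b = selected_a / total_a, selected_b / total_b
    if max(rate_a, rate_b) == 0:
        raise ValueError("no one was selected in either group")
    return min(rate_a, rate_b) / max(rate_a, rate_b)


if __name__ == "__main__":
    # Illustrative counts only: 60 of 100 selected in group A, 40 of 100 in group B.
    z, p = two_proportion_z_test(60, 100, 40, 100)
    ratio = adverse_impact_ratio(60, 100, 40, 100)
    print(f"z={z:.2f}, p={p:.4f}, impact ratio={ratio:.2f}")
    if ratio < 0.8:
        print("Below the four-fifths threshold: investigate before deploying.")
```

With these made-up counts the gap is significant at conventional levels (p is well under 0.05) and the impact ratio of about 0.67 falls below 0.8, so both checks would flag the model for a closer look.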

{{divider}}

Midlevel ML engineers can help with implementing fairness-aware training pipelines, integrating explainability tools into production systems, tracking data drift detection and alerting systems, and performing A/B testing for algorithmic changes.
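Integrating explainability into a production system can start with returning the main reasons alongside every decision. The sketch below assumes a linear scoring model. Because each feature's contribution is exactly its weight times its value, the reasons are exact. For complex models you would substitute attributions from a tool such as SHAP or LIME. The `LinearScorer` class, feature names, weights, and threshold are all hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class LinearScorer:
    """Interpretable scorer: score = bias + sum(weight * feature value)."""

    weights: Dict[str, float]  # hypothetical feature name -> learned weight
    bias: float = 0.0
    threshold: float = 0.0  # approve when score >= threshold

    def decide(self, features: Dict[str, float], top_k: int = 2) -> dict:
        # For a linear model each feature's contribution is exactly weight * value,
        # so these reasons are exact rather than approximations.
        contributions = {name: w * features[name] for name, w in self.weights.items()}
        score = self.bias + sum(contributions.values())
        # Rank reasons by absolute impact, whether they pushed the score up or down.
        reasons: List[Tuple[str, float]] = sorted(
            contributions.items(), key=lambda item: abs(item[1]), reverse=True
        )[:top_k]
        return {"approved": score >= self.threshold, "score": score, "top_reasons": reasons}


if __name__ == "__main__":
    scorer = LinearScorer(
        weights={"income_to_debt": 1.5, "missed_payments": -2.0, "account_age_years": 0.3},
        bias=-1.0,
    )
    decision = scorer.decide({"income_to_debt": 2.0, "missed_payments": 1, "account_age_years": 5})
    print(decision)
```

Logging these reasons with each decision also gives reviewers, and users who appeal, something concrete to examine.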

{{divider}}

Senior ML engineers can take responsibility for end-to-end algorithmic integrity systems, custom fairness optimization frameworks, advanced monitoring and alerting infrastructures, and cross-functional team coordination for ethics implementation.
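One building block of a monitoring and alerting infrastructure is a drift check that compares recent inputs or scores against a baseline. The sketch below uses the population stability index (PSI) with equal-width bins taken from the baseline's range (quantile bins are a common alternative) and raises an alert above a threshold. The 0.25 default reflects a commonly cited rule of thumb, not a standard, so tune it for your data. The `send_alert` hook is hypothetical and stands in for whatever paging or ticketing system you use. The same threshold-and-alert pattern can be applied to fairness metrics tracked over time.

```python
import math
from typing import Callable, List, Sequence


def population_stability_index(
    baseline: Sequence[float], recent: Sequence[float], bins: int = 10
) -> float:
    """PSI = sum over bins of (recent_share - baseline_share) * ln(recent_share / baseline_share)."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # avoid division by zero for a constant baseline

    def shares(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            # Clamp values outside the baseline range into the first or last bin.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        return [c / len(values) for c in counts]

    eps = 1e-6  # floor for empty bins so the log stays finite
    psi = 0.0
    for b, r in zip(shares(baseline), shares(recent)):
        b, r = max(b, eps), max(r, eps)
        psi += (r - b) * math.log(r / b)
    return psi


def check_drift(
    name: str,
    baseline: Sequence[float],
    recent: Sequence[float],
    threshold: float = 0.25,
    send_alert: Callable[[str], None] = print,  # hypothetical hook; print is a placeholder
) -> bool:
    """Return True, and call the alert hook, when PSI exceeds the threshold."""
    psi = population_stability_index(baseline, recent)
    if psi > threshold:
        send_alert(f"Drift alert for {name}: PSI={psi:.3f} exceeds {threshold}")
        return True
    return False


if __name__ == "__main__":
    baseline = [i / 100 for i in range(100)]       # e.g. last quarter's model scores
    shifted = [0.5 + i / 200 for i in range(100)]  # recent scores bunched in the upper half
    check_drift("model_score", baseline, baseline)  # stable: no alert
    check_drift("model_score", baseline, shifted)   # drifted: alert is printed
```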

{{divider}}

Reaching out to external organizations can give you deeper insight into evolving tools as well as industry norms.